These days, the education market is being flooded with products that claim to be “research-based” or “evidence-based”. For educators who are always looking for a program, product, or practice that will help students, it is easy to become overwhelmed by all the hype. How do we sift through the claims made by the creators and sellers of products or practices to truly distinguish the good from the bad?

We need to improve our ability to think about research critically and draw our own conclusions, just as we teach our students to do. At the RSE-TASC, we are exploring ways to help educators in our region critically read research in order to identify effective instructional practices. One of the first steps is to make sure we have a common vocabulary for the discussion.

“Research-based” vs. “evidence-based”: What is really the difference?

As stated above, the education market has been flooded with products and practices that claim to have research behind them. This is probably true, but all that means is that someone did some research on either the practice or the underlying principles. “Research-based” does not mean that the practice has been proven to be effective because it says nothing about the quality or outcomes of the research conducted on the practice. What we should be looking for are evidence-based practices or interventions (EBPs).

In their article “Unraveling Evidence-Based Practices in Special Education,” Cook and Cook (2011) define EBPs as practices that are supported by multiple studies with acceptable research designs (i.e., experimental, quasi-experimental, or single-subject studies) and that demonstrate meaningful effects on student outcomes as determined by effect size (more on this below).

When a practice is repeatedly shown to be effective by these standards, it can be called “evidence-based”. So how do we know if there is a meaningful effect?

Effect Size? What’s That?

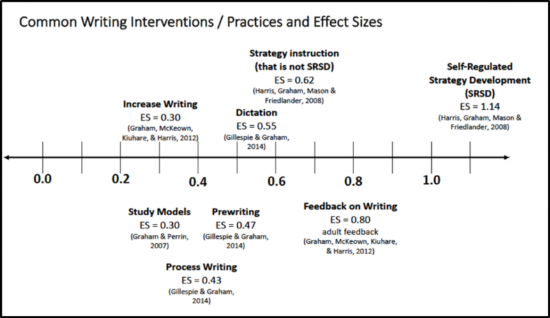

An effect size tells you not only whether a practice or intervention is effective, but how effective it is. If you are trying to determine whether a study shows that a practice will improve your students' outcomes, search the article or webpage for the effect size. It will usually be a number between -3.0 and +3.0. A negative effect size means the performance of students receiving the intervention actually decreased, a positive effect size means performance increased, and an effect size of zero means the intervention had no effect on student performance. As readers, we want to look for an effect size of +0.3 or higher. General guidelines accepted in the field are that an effect size of +0.8 is a large effect, +0.5 a moderate effect, and +0.2 a small effect.
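The article does not say which effect size statistic a study will report. One of the most common is Cohen's d: the difference between the intervention group's mean and the comparison group's mean, divided by their pooled standard deviation. The Python sketch below assumes Cohen's d, uses made-up test scores, and applies the +0.2 / +0.5 / +0.8 benchmarks and the +0.3 threshold quoted above. The function names are hypothetical, chosen only for illustration.

```python
import math
from statistics import mean, stdev


def cohens_d(treatment, comparison):
    """Cohen's d: standardized mean difference using a pooled standard deviation.

    Positive values mean the intervention group scored higher than the
    comparison group; negative values mean it scored lower.
    """
    n1, n2 = len(treatment), len(comparison)
    s1, s2 = stdev(treatment), stdev(comparison)  # sample SDs (divide by n - 1)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(comparison)) / pooled_sd


def describe_effect(d: float) -> str:
    """Label d using the rough benchmarks quoted in the article (0.2 / 0.5 / 0.8)."""
    if d < 0:
        return "negative"      # intervention group did worse
    if d >= 0.8:
        return "large"
    if d >= 0.5:
        return "moderate"
    if d >= 0.2:
        return "small"
    return "negligible"        # from 0 up to just under 0.2


def worth_a_closer_look(d: float, threshold: float = 0.3) -> bool:
    """Hypothetical helper: the article suggests looking for +0.3 or higher."""
    return d >= threshold


# Hypothetical scores for two small classes (invented for illustration only).
treatment = [78, 85, 90, 72, 88, 81]
comparison = [70, 75, 80, 68, 77, 74]

d = cohens_d(treatment, comparison)
print(f"Effect size d = {d:+.2f} ({describe_effect(d)}); "
      f"meets +0.3 threshold: {worth_a_closer_look(d)}")
```

Dividing by the standard deviation is what lets effect sizes from studies that used different tests or score scales be compared on one common scale.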

Help! I Don’t Have Time to Read and Interpret All the Research!

There are many websites that have already sifted through the research for you; one example of a high-quality website is shown in the School Tool on Page 3. For educators who want to make their own judgments, the best way to find evidence-based practices, or to check on a practice you have been using, is to read meta-analyses.

A meta-analysis is a “study of studies” in which the authors collate all of the published research on a practice and determine its effectiveness across multiple studies. A high-quality meta-analysis will state its criteria for including studies, analyze the data to produce an overall effect size based on the outcomes of all of the included studies, and discuss the effectiveness of the practice.
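How the individual results are combined into an overall effect size varies from one meta-analysis to another. One common approach, assumed here, is a fixed-effect model: each study's effect size is weighted by the inverse of its sampling variance, so larger and more precise studies count more. The Python sketch below uses the standard large-sample approximation for the variance of Cohen's d; the study names and numbers are invented, and many published meta-analyses use a random-effects model instead, which this sketch does not implement.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """One study's result: its effect size (d) and group sizes (hypothetical)."""
    name: str
    d: float
    n_treatment: int
    n_comparison: int


def variance_of_d(s: Study) -> float:
    """Large-sample approximation to the sampling variance of Cohen's d."""
    n1, n2 = s.n_treatment, s.n_comparison
    return (n1 + n2) / (n1 * n2) + s.d**2 / (2 * (n1 + n2))


def pooled_effect(studies):
    """Fixed-effect, inverse-variance weighted mean effect size with a 95% CI.

    Studies with smaller variance (usually larger samples) get more weight.
    """
    weights = [1 / variance_of_d(s) for s in studies]
    total = sum(weights)
    pooled = sum(w * s.d for w, s in zip(weights, studies)) / total
    se = math.sqrt(1 / total)  # standard error of the pooled estimate
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se)


# Hypothetical studies of a single practice (made-up numbers, illustration only).
studies = [
    Study("Study A", 0.45, 30, 30),
    Study("Study B", 0.20, 120, 115),
    Study("Study C", 0.60, 15, 18),
]

overall, (low, high) = pooled_effect(studies)
print(f"Overall effect size: {overall:+.2f} (95% CI {low:+.2f} to {high:+.2f})")
```

Notice that the overall estimate is pulled toward Study B, the largest study, rather than landing on the simple average of the three effect sizes. This is one reason a meta-analysis's overall effect size can differ from what a quick scan of individual studies suggests.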

As educators, our job is to ensure that we provide our students with the best instruction possible, so we must become more astute readers of research. When you are presented with a practice that is advertised as “evidence-based”, ask yourself, and the vendor, about the quality of the research and the effect size. Finding a meta-analysis of the practices behind the program is a great way to start.

References

Cook, B. G., & Cook, S. C. (2011). Unraveling evidence-based practices in special education. Journal of Special Education, 47(2), 71–82.
